Adapt and Thrive: The Critical Role of a Living Workload Placement Strategy

Introduction

As Gartner-recognized consultants in workload placement strategy (WPS), we are responsible for bringing the full range of available options to our clients. Here’s some of what we’re seeing in the marketplace as these options proliferate.

First, Setting the Context

Two questions:

- Where does the WPS fit into the context of other strategies covering various domains in the business and in IT?

- What’s involved with making the WPS successful?

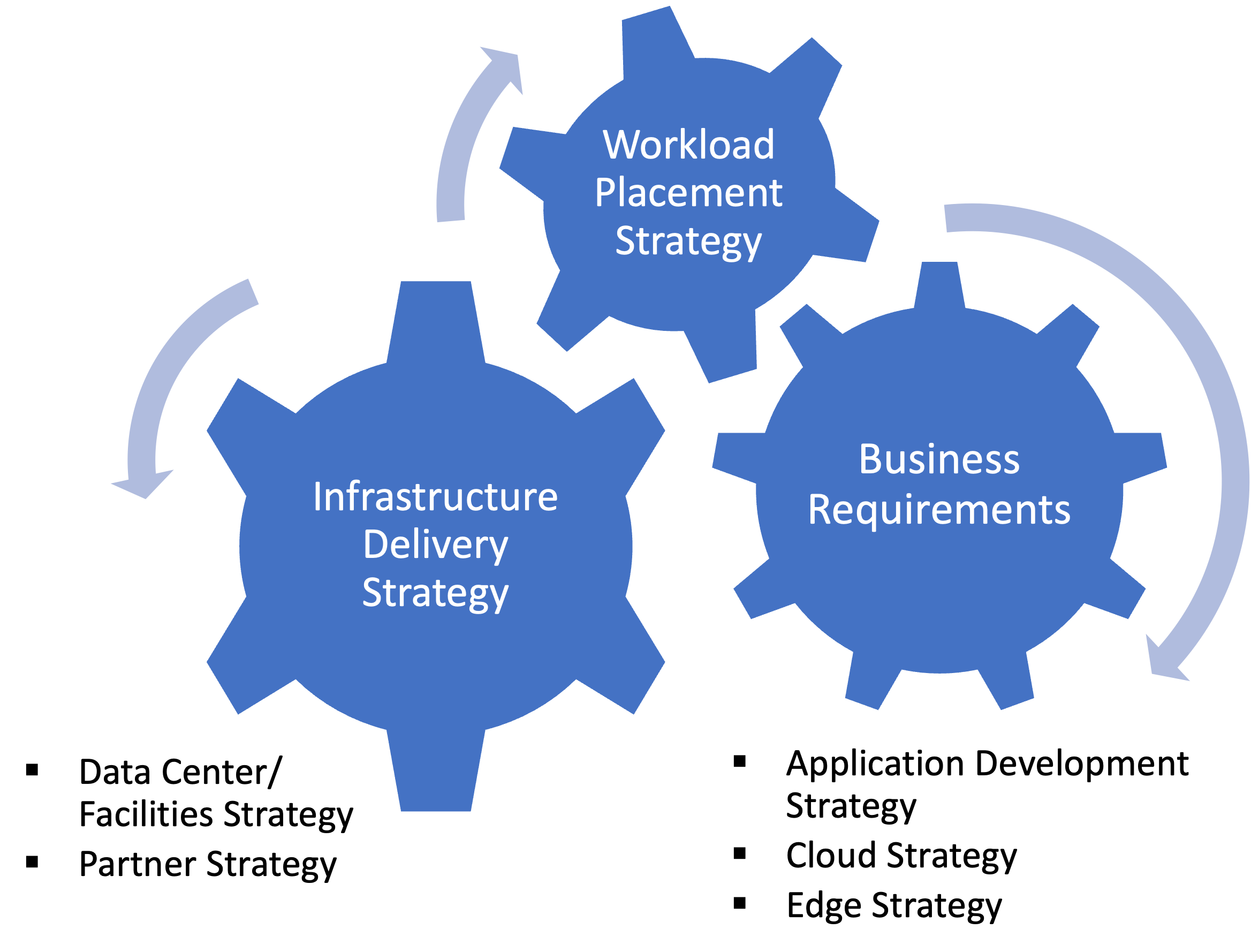

The Workload Placement Strategy must align with related strategies

In this simple view, the CIO and team respond to (or better, anticipate) the needs of the business with an application development strategy which today is most likely largely reliant on cloud development. Edge is of emerging, if not yet equal, importance.

Where and how workload is ultimately delivered also depends on the selection of partners – cloud and perhaps colocation. Your data center strategy will reflect your firm’s position about whether to retain on-premises facilities (for any one of several good reasons) or whether you have decided to take advantage of the scale and interconnection possibilities that colocation provides.

The critical components of workload placement strategy

We must

- Understand the current workload authoritatively: its characteristics and interdependencies

- Understand the business: its opportunities, challenges, and direction, which together we can translate into guiding principles

- Build a decision model which

  - considers both local and global optimums. For example, it may be “cheaper” to locate a given workload on a platform, but if you don’t have the tools or skills to manage it, the price tag increases pretty quickly

  - remains valuable going forward as the needs of the business evolve and the marketplace provides new possibilities

- Understand the options available in the marketplace
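The local-versus-global point in the decision model can be made concrete with a small sketch. The Python below is illustrative only: the venue names, the three cost categories, and every number are assumptions invented for the example, not figures from a real engagement or a prescribed model.

```python
from dataclasses import dataclass


@dataclass
class Venue:
    """A candidate execution venue. All figures are hypothetical monthly costs."""
    name: str
    monthly_run_cost: float  # the platform's price tag for this workload
    skills_gap_cost: float   # tooling, training, or contractors needed to operate it
    egress_cost: float       # moving data to dependent systems hosted elsewhere


def local_cost(v: Venue) -> float:
    """Local optimum: look only at the platform's price tag."""
    return v.monthly_run_cost


def total_cost(v: Venue) -> float:
    """Broader view: add the operational costs the price tag hides."""
    return v.monthly_run_cost + v.skills_gap_cost + v.egress_cost


venues = [
    Venue("platform_a", 8_000, 6_000, 1_500),
    Venue("platform_b", 10_000, 500, 300),
]

cheapest_locally = min(venues, key=local_cost)
cheapest_overall = min(venues, key=total_cost)
print(cheapest_locally.name, cheapest_overall.name)  # prints: platform_a platform_b
```

In this made-up case the platform with the lower price tag loses once the skills gap is priced in. A real model would weigh far more than cost (risk, compliance, latency), but the shape of the comparison is the same.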

This post focuses on the new and evolving options for both existing workloads and the development of new workloads. All of us need to understand them: even if we choose not to leverage them, we have competition that might.

We’re all hybrid now. Perhaps not all. But if your revenues are $100M or more, there’s a very high probability that you have workload in the cloud as well as some legacy workload which hasn’t been migrated, or for which no migration is foreseen because there is no business case.

“The data center is no longer the center of data.” Hybrid means that, for most of us, the data center is one node on the infrastructure mesh which links to some configuration of cloud providers, colocation facilities, edge instances or other data centers. Some have called data gravity the biggest implementation issue in digital transformation. Single biggest or not, it is significant and is growing.

Cloud-native development is becoming the rule. Gartner estimates that by 2025 over 95% of new digital workloads will be deployed on cloud-native platforms, up from 30% in 2021.[i] Even if Gartner overstates the rate of growth, the direction is clear.

Requirements are continually changing. “By 2027, 85% of the workload placements made before 2022 will no longer be optimal due to changing requirements.” Gartner was still holding to this assumption as recently as March 2023.

Specifically in this short piece, we will cover developments in

- the cloud

- distributed cloud, and edge

- specialized infrastructure.

Industry clouds are emerging rapidly

A terrific description comes from Jujhar Singh, General Manager of Salesforce’s industries business:

industry clouds…are modular building blocks that actually can speed up the development of industry-specific digital solutions… industry cloud platforms are unique in the sense that they combine software, platform, and infrastructure-as-a-service capabilities to deliver specific solutions for industry use cases. They’re bundled with data models, industry processes, tools, and applications… they are composable. They can be used in conjunction with your existing stack.[ii]

Gartner expects that by 2027, enterprises will use industry cloud platforms to accelerate more than 50% of their critical business initiatives, whereas in 2021, it was less than 10%.[iii]

Distributed cloud grows steadily, if not spectacularly

VMware’s excellent definition:

A distributed cloud is an architecture where multiple clouds are used to meet compliance needs, performance requirements, or support edge computing while being centrally managed from the public cloud provider.[iv]

Both Microsoft Azure Stack and AWS Outposts have gained enough traction and client support to be considered for use cases where the common cloud control plane is desirable, but performance or data sovereignty requirements don’t permit a remote cloud placement.

While this technology model has not yet reached a dominant position, it is clearly emerging as a real option. The forecasts we looked at seemed to converge around a compound annual growth rate (CAGR) of about 12% between 2022 and 2027.
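To put that growth rate in perspective, here is a quick back-of-the-envelope calculation of what roughly 12% compounded annually from 2022 to 2027 implies. The function name is ours, and the result is simple arithmetic on the rate quoted above, not a market-size forecast.

```python
def cumulative_growth(cagr: float, years: int) -> float:
    """Total growth multiple implied by compounding `cagr` for `years` years."""
    return (1 + cagr) ** years


multiple = cumulative_growth(0.12, 2027 - 2022)
print(f"{multiple:.2f}x")  # prints: 1.76x
```

In other words, a market growing at that rate would be about three-quarters larger at the end of the five-year window than at the start: steady, not spectacular.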

Variable consumption models grow toward mainstream status

According to HPE,

consumption-based IT allows you to move from a costly capital expenditure model to a variable cost structure. The overall effect is similar to a public cloud-like experience that allows you to keep a tighter control on your operations in-house… According to 451 Research, on average 59 percent of businesses overprovision IT resources.[v]

Gartner assumes that in “2025, 40% of newly procured premises-based compute will be consumed as a service, up from less than 10% in 2021.”[vi]

Most major players have an offering, including Cisco, Dell, HPE, Lenovo, NetApp, and Pure Storage.

High Performance Computing (HPC) impacts the physical data center as well

Our friends at BRUNS-PAK tell us that some of their clients are deploying HPC in existing legacy data centers and are running into electrical and mechanical constraints. Some that ran a static 1-10 kW/rack for many years are now deploying 20-30 kW/rack, and those with research computing are deploying in excess of 55-60 kW/rack, with future plans to deploy 90-100 kW/rack.

BRUNS-PAK assesses operating conditions and reliability (including kW per cabinet) and the headroom available to increase kW per cabinet (using a CFD model), then delivers recommendations, cost estimates, and a schedule for the associated improvements. A short video is available here.

Developments in specialized infrastructure: AI, data and analytics, and even quantum

…cloud is lowering the barrier to entry by providing easy and on-demand access to these highly specialized compute infrastructures. These technologies offer capabilities that enable complex simulations, massive data analysis, and intelligence, which can be leveraged across all industries…[vii]

Notable use cases include Artificial Intelligence/Machine Learning (AI/ML), High Performance Computing (already discussed), Big Data and Analytics, real-time and latency sensitive applications, and video processing and rendering.

A few notes:

- AI infrastructure is heavily dependent on frameworks. AWS tells us that “70 percent of ML projects rely on ML frameworks such [as] TensorFlow, PyTorch, and Apache MXNet, so infrastructure that supports and optimizes these frameworks is a must.” Other factors in hosting decisions include performance, cost, manageability, and the ability to port code.[viii]

- Big Data and Analytics: According to HPCWire, “Storage solutions must be optimized… you must decide whether cloud or on-premises storage will be most cost-effective… Servers and network hardware must have the necessary processing power and throughput to handle massive quantities of data in real-time.” They note that bottlenecks in storage media, scalability issues, and slow network performance can become huge impediments to success.[ix]

- Deep Learning (DL): from the same HPCWire source: “You can run ML on a non-GPU-accelerated cluster, but DL typically requires GPU-based systems. And training requires the ability to support ingest, egress, and processing of massive datasets.”

- Edge: real-time and latency-sensitive applications may utilize edge computing devices, high-performance networking equipment, and in-memory processing technologies.

- Video processing and rendering can benefit from specialized infrastructure like GPUs, dedicated video processors, and high-performance storage systems.

- Quantum: early, but it’s happening:

“The first quantum computer in healthcare, anticipated to be completed in early 2023, is a key part of the two organizations’ 10-year partnership aimed at fundamentally advancing the pace of biomedical research through high-performance computing. Announced in 2021, the Cleveland Clinic-IBM Discovery Accelerator is a joint center that leverages Cleveland Clinic’s medical expertise with the technology expertise of IBM, including its leadership in quantum computing.”[x]

GTSG wants to help build the workload placement strategy

We bring three essential commitments to any workload placement strategy engagement:

- We dig deep into the workload interdependencies among applications, databases and servers, which can impact performance if the execution venue is changed

- As we also provide managed services, we bring a “runtime sensibility” to the analysis. In plain English, no one around here does anything on a strategy engagement without Day Two top-of-mind.

- We bring the most current proven options to the engagement, whether that means the full breadth of cloud providers (including industry options), specialized infrastructure options, or colocation facilities.
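To illustrate why interdependencies matter when the execution venue changes, here is a minimal sketch that groups components which talk to each other, directly or indirectly, so each group can be evaluated as a unit. The inventory is hypothetical, and a real discovery exercise would draw on far richer data (traffic volumes, latency sensitivity, ownership) than a simple list of pairs.

```python
from collections import defaultdict

# Hypothetical inventory: each pair means "these two components talk to each other".
dependencies = [
    ("billing-app", "billing-db"),
    ("billing-app", "auth-service"),
    ("reporting-app", "warehouse-db"),
    ("auth-service", "ldap-server"),
]


def move_groups(edges):
    """Return sorted groups of components that are directly or indirectly connected."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)

    seen, groups = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        # Depth-first walk to collect everything reachable from `start`.
        stack, group = [start], set()
        while stack:
            node = stack.pop()
            if node in group:
                continue
            group.add(node)
            stack.extend(graph[node] - group)
        seen |= group
        groups.append(sorted(group))
    return groups


groups = move_groups(dependencies)
for g in groups:
    print(g)
```

With this sample data, the billing application, its database, the authentication service, and the LDAP server land in one group, while reporting and its warehouse form another. Moving only part of a group is where performance surprises tend to come from.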

* * * * *

We hope you have found value in this entry. If you have questions, please write us at Partners@gtsg.com and we’ll set up a discussion. Thank you.

[i] https://www.gartner.com/en/newsroom/press-releases/2021-11-10-gartner-says-cloud-will-be-the-centerpiece-of-new-digital-experiences, retrieved 04.21.23

[ii] https://www2.deloitte.com/content/dam/Deloitte/global/Documents/Technology/us-cloud-industry-clouds-composable-industry-specific-solutions-Deloitte-on-cloud-podcast.pdf, retrieved 04.21.23

[iii] https://www.gartner.com/en/information-technology/insights/top-technology-trends, retrieved 04.21.23

[iv] What is a Distributed Cloud? | VMware Glossary, retrieved 04.26.23

[v] IT consumption: A primer for your business | HPE, retrieved 04.26.23

[vi] https://www.gartner.com/en/articles/4-predictions-for-i-o-leaders-on-the-path-to-digital-infrastructure, retrieved 04.21.23

[vii] https://www.forrester.com/blogs/cloud-doesnt-obliterate-specialized-compute-it-enables-it/, retrieved 04.26.23

[viii] https://d1.awsstatic.com/psc-digital/2021/gc-400/ml-infrastructure/ML-Infrastructure.pdf?trk=d9ff33c8-2afb-4f49-b85e-1ffcdcabafa9, retrieved 04.21.23

[ix] Overcoming Challenges to Big Data Analytics Workloads with Well-Designed Infrastructure (hpcwire.com), retrieved 04.21.23

[x] https://newsroom.clevelandclinic.org/2022/10/18/cleveland-clinic-and-ibm-begin-installation-of-ibm-quantum-system-one/, retrieved 04.21.23