A Holistic Approach to IBM Mainframe Hardware and Software Cost Reduction

What Success Looks Like

In early November 2017, a financial services firm was “instructed” by its outsourcing provider that its CPU was out of capacity. The outsourcer told the client that they needed to approve an engine upgrade by November 30, in order to accommodate the hardware provider’s December 31 financial year-end. This would have been a significant unplanned capital outlay, which became known to the firm’s financial organization well after budgets for 2018 were locked.

Our client, who managed the relationship with the outsourcer, declined their request. He turned to GTSG hoping to create some headroom and to buy some time – and to get an unbiased view of the facts.

Mainframe operations were competently and professionally managed by the outsourcer to the terms of their contract, and that qualifier is the key. Nonetheless, our performance, workload and capacity management specialists found adequate headroom to avoid the upgrade. Several recommendations were made, including modifications to WLM, DB2 zParms and buffer pools, CICS parameters and additional adjustments. These conclusions were accepted by the outsourcer; there have been no service impacts.

As of May 2021, 42 months after the (theoretical) need was identified, the expenditure for the upgrade has not been required. Payback on the engagement is conservatively 5:1.

Your Priorities and Our Program

If you’re reading this short paper, it’s likely for one of two reasons:

- You are under the steadily increasing cost pressure that has been the rule since the economic crisis of 2008, exacerbated by the health crisis of 2020 and the years following; or

- The demands of your business require mobile applications and you recognize the value of the power and resilience of the mainframe behind the “storefront,” but you still face demands for cost containment.

Even in the most mainframe-friendly shops, availability of budget for additional capacity is at a premium. Skilled resources are stretched, such that once-common performance management disciplines have been pushed aside by daily priorities.

“Throwing hardware” at a perceived capacity problem has never been a solution. The most it has ever done is provide a short-term “band-aid,” but today, most shops don’t even have the funding available for that… and if they do, it’s not fun to ask.

We frequently observe shops where performance and capacity management simply has not been a priority. This results in problems that “sneak up” on you. SLAs are missed; help desk lines ring more often; other performance issues, some of which may not even have formal SLAs, come to the forefront. We see this at both the infrastructure and application layers.

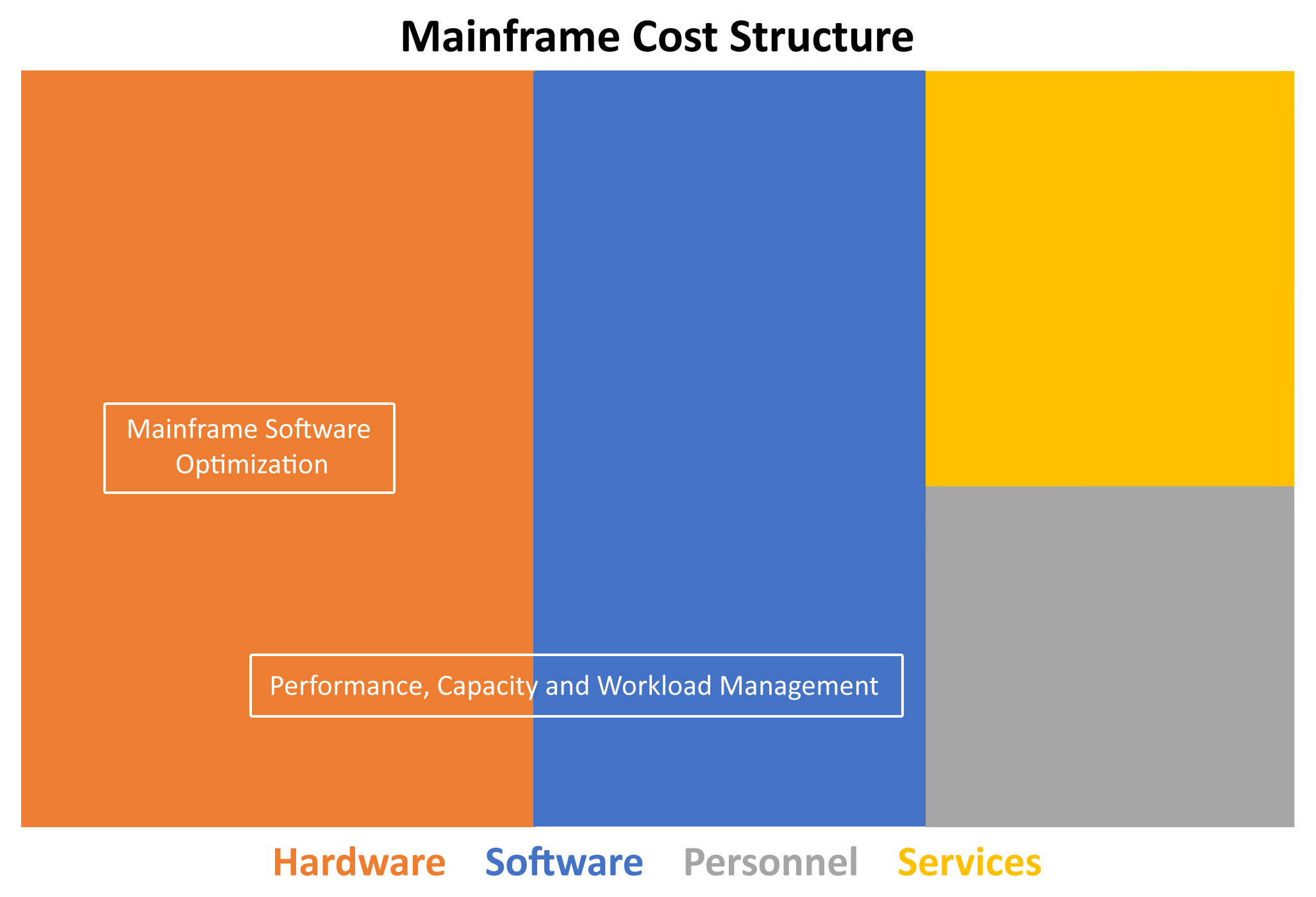

In the remainder of this paper, we address two of the three major areas in which GTSG can help a mainframe shop achieve significant cost reduction:

- Performance, capacity and workload management: still the foundation of what we do. It addresses directly the 31% of mainframe cost structure which Gartner tells us, on average, is attributable to hardware. This baseline practice also impacts the software stack (40% of the cost structure), as most shops are still managing to a rolling four-hour average.

- Software Portfolio Optimization: what has changed since we first wrote this paper is the software provider marketplace. Consolidations have encouraged some to seek a surprising level of price increase at contract renewal time. In some cases, mainframe shops have met this challenge with a wholesale replacement of the provider’s software. But in other cases, they have been frustrated by an inability to migrate before the contract renewal date. Long-term planning becomes essential to avoiding these price increases, some of which are reported at 500% in the initial offer.
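
The rolling four-hour average mentioned above can be illustrated with a short calculation. This is a minimal sketch, assuming MSU samples arrive at a fixed five-minute interval, so that a four-hour window holds 48 samples. The function names and sample values are hypothetical, and this is not IBM's reporting tool or its exact method.

```python
from collections import deque


def rolling_four_hour_average(msu_samples, samples_per_window=48):
    """Return the rolling average at each sample position.

    Assumes fixed-interval samples (48 five-minute samples = four hours).
    Early positions average over however many samples exist so far.
    """
    window = deque(maxlen=samples_per_window)
    running_total = 0.0
    averages = []
    for msu in msu_samples:
        if len(window) == window.maxlen:
            # The oldest sample is about to roll out of the window.
            running_total -= window[0]
        window.append(msu)
        running_total += msu
        averages.append(running_total / len(window))
    return averages


def peak_rolling_average(msu_samples, samples_per_window=48):
    """Return the highest rolling average seen across the samples."""
    return max(rolling_four_hour_average(msu_samples, samples_per_window))


# Hypothetical day: steady 100 MSU with a 30-minute spike to 400 MSU.
samples = [100] * 48 + [400] * 6 + [100] * 48
print(peak_rolling_average(samples))
```

In this made-up series the instantaneous peak is 400 MSU, but the peak rolling average is far lower because the spike is averaged with the quieter samples around it. That is why shortening, moving, or tuning peak-period work can influence software charges that are tied to this kind of average.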

GTSG also helps with labor-based cost reduction (the remaining 29% of cost) with our flexible staffing models. For more about our skills, have a look at our paper Navigating the Future.

Performance, Capacity and Workload Management

Performance Management

is the practice of iteratively eliminating or reducing the consumption patterns of a given job, schedule, transaction, or query. “Tuning” is a term commonly used to describe this process; it is MIPS elimination/reduction – plain and simple.

The scope of this analysis includes infrastructure, applications, and scheduling (where elapsed times are of great concern). We take a tactical, task-oriented approach to resource consumption reductions in combination with a more strategic, process-oriented approach for the future. We look to exploit enhancements introduced in more recent generations of mainframes.

We are, after all, consultants, so our approach begins with an assessment, in which we review the current environment and then identify and prioritize opportunities based on benefit relative to level of effort, so that you can start to get the relief that you need. We know we’ve been successful when you’re performing the same or more work with less computing resource, just as in the success story with which we opened the paper.

Workload Management

is the practice of prioritizing workloads and delivering computing resources where they are needed to meet SLAs. We focus on workload prioritization, SLA achievement, batch schedule optimization, and “doing more with what you have” approaches to distributing computing resources.

We leverage the discovery work done in the performance management section described above, assess and re-engineer Workload Manager, review critical path batch schedules, and offer insights on obsolete processes & jobs, leveraging new technology where appropriate.

Workload Management is successful when we deliver enough computing resource to a given workload to allow it to meet its defined SLA (explicit or implicit).

Capacity Management

is the practice of modeling future computing resource requirements based upon historical consumption, achievement of SLAs, business forecasts, the effects of the tuning work, and anticipated business events. We take a “business driven” approach, correlating consumption with business drivers: that is, we determine which business activity (selling widgets, say) drives consumption and how much CPU every incremental 1,000 widgets sold requires. We consider known future events (acquisitions, decommissions, business increases or decreases, seasonal impacts, and so on), not just a line that trends by ‘x%.’
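
The business-driven approach described above can be sketched as a simple least-squares fit of consumption against a business driver, followed by adjustments for known events. This is only an illustration under stated assumptions: a single driver, a linear relationship, and made-up figures. The function names, the MIPS values, and the event adjustments are all hypothetical.

```python
def fit_business_driver(driver_values, consumption_values):
    """Fit consumption = intercept + slope * driver by ordinary least squares."""
    n = len(driver_values)
    if n < 2 or n != len(consumption_values):
        raise ValueError("need two or more paired observations")
    mean_x = sum(driver_values) / n
    mean_y = sum(consumption_values) / n
    sxx = sum((x - mean_x) ** 2 for x in driver_values)
    if sxx == 0:
        raise ValueError("driver values must not all be identical")
    sxy = sum(
        (x - mean_x) * (y - mean_y)
        for x, y in zip(driver_values, consumption_values)
    )
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast_consumption(slope, intercept, driver_forecast, event_adjustments=()):
    """Project consumption from the driver, then add known event adjustments.

    Adjustments are signed: positive for growth such as an acquisition,
    negative for savings such as completed tuning work.
    """
    return intercept + slope * driver_forecast + sum(event_adjustments)


# Hypothetical history: widgets sold (in thousands) and peak MIPS consumed.
widgets_sold_thousands = [10, 20, 30, 40]
peak_mips = [250, 300, 350, 400]

slope, intercept = fit_business_driver(widgets_sold_thousands, peak_mips)
print(f"MIPS per incremental 1,000 widgets: {slope:.1f}")

# Business forecast of 55 thousand widgets, plus an assumed acquisition
# (+60 MIPS) and assumed tuning savings (-25 MIPS).
projected = forecast_consumption(slope, intercept, 55, event_adjustments=(60, -25))
print(f"Projected peak MIPS: {projected:.1f}")
```

As actual consumption arrives, the same fit can be rerun with the new observations, and the gap between projected and actual values becomes the variance that is fed back into the model.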

We also integrate our Performance and Workload Management efforts into future projections, validating projections as they become actual consumption – and then assess variances for integration back into the model.

Capacity Management is successful when it can accurately predict the need for additional computing resource before effective Workload Management becomes impossible.

Obviously, there are close relationships among the three. Workload Management becomes more difficult when capacity is improperly planned; Capacity Management predictions are skewed if savings from tuning are not accounted for; and SLAs are missed when Workload Management is inadequate. This leads to a false perception: either that the capacity program is flawed or that more hardware is needed.

Software Portfolio Optimization

Marketplace Understanding

GTSG has its origins in 1988 as a team of systems programmers. While the firm has evolved significantly, mainframe has been mission-critical to the firm every day of our 33 years.

We serve a broad customer base which includes smaller shops (200 MIPS), midsized shops (up to 8,000 to 10,000 MIPS), and some of the largest shops in the world.

This broad experience combined with our relationships with Tier 1 providers (including two of the three highest ranked outsourcers for mainframe according to Gartner) keeps us at the forefront of developments in the mainframe software marketplace.

The current dynamic finds us engaged in a wave of competitive displacement activity driven largely by changes in pricing models following recent mergers. We also maintain connections with key software providers, including Micro Focus, Broadcom/CA Technologies, IBM, HCL Technologies (who have acquired some of the IBM portfolio), Rocket Software, BMC and BMC Compuware Software, GT Software and Stefanini.

How we help: the analysis

The Four-Step Process for Rationalization

Step One: Eliminate tools used by a small audience. The sheer size of the audience is not the only determinant of importance but it’s a start, and this is after all a cost reduction initiative. Survey all of the tools; quantify the audience size; assess the impact of possible elimination; propose alternatives if the impact cost trumps the tool cost.

Step Two: Replace a product with an existing feature. z/OS now has numerous features that might not have been available when you purchased a specific-function product. We exploit all of these inherent features in a cost-pressured environment. This involves training of the user audience, but the payback is often substantial. Politically, it’s hard for anyone to argue against using a robust feature for which you’re already paying. Performance monitors come to mind, especially in decommission scenarios: a client can potentially replace a full suite of CPU-consuming products with the simpler and less intrusive RMF from IBM, which also carries a cost, though not on the scale of some other performance suites.

Step Three: Competitive displacement of a low-use product. Picture an expensive product used by a small group within your organization. If you can’t do without the product (Step One) and can’t replace it with a built-in z/OS feature (Step Two), consider a competitive displacement where you swap Vendor A for Vendor B with locked-in pricing for the term you feel is most appropriate for you. Then we do the complete analysis on the training and migration required.

Step Four: Competitive displacement of a high-use product or a broader provider consolidation. When Steps One through Three do not work, we need to consider this step. Admittedly, this is a tough sell to the audience of the product, but if the financials support the acquisition of the tool, the implementation of the tool, and the training required, this research is certainly warranted.
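
The four steps above can be read as an ordered triage applied to each tool in the portfolio. The sketch below is one possible encoding, not GTSG's actual method: the field names, the audience threshold, and the simple cost comparisons are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    name: str
    annual_cost: float               # current annual license cost
    user_count: int                  # size of the audience
    elimination_impact_cost: float   # estimated annual cost of doing without it
    has_builtin_alternative: bool    # e.g., an existing z/OS feature covers it
    displacement_annual_cost: float  # replacement license plus annualized
                                     # implementation and training


def recommend_step(tool, small_audience=10):
    """Return the first of the four steps that applies to this tool.

    small_audience is a hypothetical threshold; each shop would set its own.
    """
    low_use = tool.user_count <= small_audience
    # Step One: eliminate only if the impact cost does not trump the tool cost.
    if low_use and tool.elimination_impact_cost < tool.annual_cost:
        return "Step One: eliminate"
    # Step Two: use a feature you are already paying for.
    if tool.has_builtin_alternative:
        return "Step Two: replace with existing feature"
    # Steps Three and Four: displace only when the financials support it.
    if tool.displacement_annual_cost < tool.annual_cost:
        if low_use:
            return "Step Three: competitive displacement (low use)"
        return "Step Four: competitive displacement (high use)"
    return "Retain: no step is financially supported"


# Hypothetical portfolio entries.
portfolio = [
    Tool("report-viewer", 80_000, 4, 10_000, False, 60_000),
    Tool("perf-monitor", 300_000, 25, 400_000, True, 250_000),
    Tool("file-utility", 120_000, 6, 200_000, False, 70_000),
    Tool("scheduler", 900_000, 300, 5_000_000, False, 600_000),
]
for tool in portfolio:
    print(f"{tool.name}: {recommend_step(tool)}")
```

The ordering matters: cheaper, less disruptive options are tested first, and a displacement is recommended only when its full cost, including implementation and training, comes in under the current spend.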

Further, when the marketplace tells us that providers are significantly escalating renewal rates, we owe it to our clients to try to get out in front of the contract cycle to create options for them. We have seen clients work for two years to migrate away from one suite to another. A nine-month contract negotiation works for the provider – not for our clients.

Framing the alternatives

In addition to listening to our clients and monitoring the practices of the providers, GTSG is constantly listening to the marketplace for mainframe announcements. We utilize our relationships with Gartner, Forrester, and other industry analysts in support of this practice.

GTSG exercises our operational expertise and knowledge of IBM z pricing systems within the structure of a methodology provided by Gartner. One specific problem we address is the lack of transparency in many agreements due to extensive bundling.

Our market intelligence also tells us that many clients don’t take advantage of cross brand allotments (CBA) or Tailored Fit Pricing.

How we help: negotiation preparation and assistance

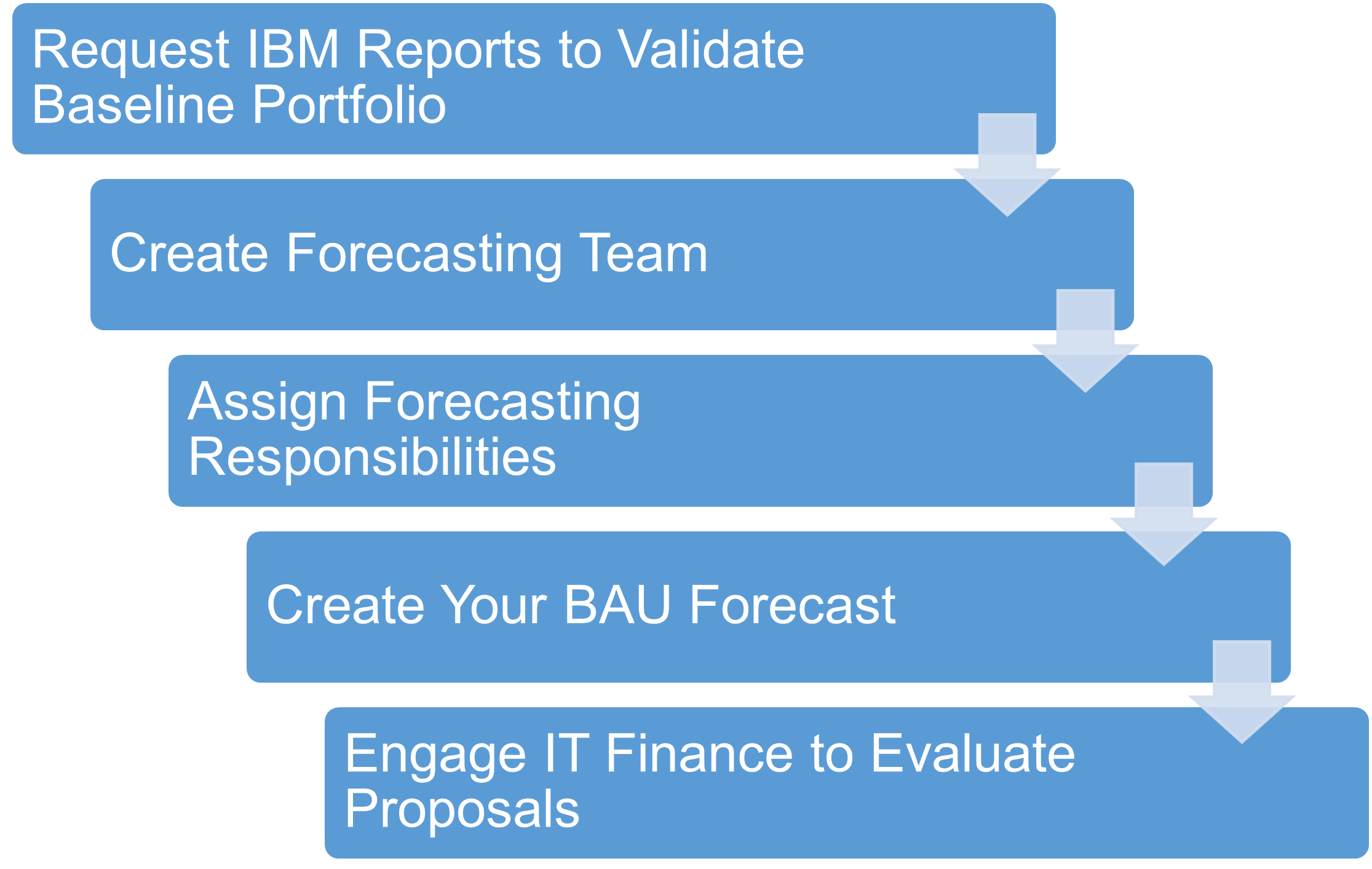

When engaged in negotiations, whether face to face with the provider or in negotiation prep, we execute within the structure of Gartner’s five-step model depicted here:

Request IBM Reports: We request the MLC Workload Pricer, with model and MSU detail, software PIDs, MLC models, pricing options for all subcapacity levels, what-if capacity change reports, etc. We also request the IPLA Workload Pricer, establish eligibility for subcapacity pricing, request subcapacity “what-if” analysis, review pricing options, and validate entitled pricing.

Create Forecasting Team: Best practice is to include in this team representatives from procurement, contracts management, operations, capacity management, applications, enterprise architecture, and finance, as applicable in a given organization. GTSG would participate in this team as it sets priorities for analysis based on changes in demand and the negotiation schedule for new license agreements.

Assign Forecasting Responsibilities, and

Create BAU Forecasts: We want to understand dividends from technology upgrades, MLC and zOTC MSU forecasts, conversions from ISV to IBM, IFL, and all viable pricing model options; LPAR changes and specialty engine opportunities (zIIP, IFL), and platform changes.

Engage IT Finance to Evaluate Proposals: GTSG will collaborate with a client to evaluate alternative pricing models.

GTSG has supported the mainframe for the past 33 years and our team will continue to do so for the next 33.

If we can help you, please reach out to us at 877.467.9885 or email us at mainframe@gtsg.com.