Issues and Considerations When Migrating Workload Away From the Mainframe

Introduction

This is not going to be one of those papers that argues the mainframe is the right answer for everything, or that disaster awaits everyone looking to migrate away from it. Many organizations have already migrated, and many of these have been eager to share their success stories.

Of the mainframe shops that remain, many, if not most, will grow workload.

In fairness, some “getting off the mainframe” success stories consist of basic function that is still resident on IBM Z but outsourced, so the dependency remains. This is most often the case when the authoritative data source remains on the mainframe.

Our own firm includes a strong managed services business whose clients, we sometimes joke, include firms in the ninth year of their five-year plan to sunset the platform. Experience bears this out: one recent client with a very large footprint engaged us to build a transition plan, in part to prepare for negotiations with their providers. Once we understood the development plan, we concluded that the firm’s best-case scenario was that 2025 MSU consumption would be reduced to “only” 2015 levels. In other words, a very large shop would remain a very large shop, but it would not get larger, so long as everything went right.

A long transition

Almost anyone would tell you that “big bang” modernization isn’t recommended in the 2020s. This leads to a protracted period of transition, which carries implications we’ll summarize as cost, complexity and competency.

Cost

Costs of transition

Whatever the cost of maintaining the mainframe, the costs of transitioning are significant as well: development, migration, the new platform itself, and the associated hardware, facilities and/or cloud fees. There are also the costs of running duplicate environments, since avoiding a “big bang” cutover means old and new must run side by side.

Increased CPU burden

In addition, hardware costs may increase beyond what is anticipated due to the added CPU burden of development, testing, data translation, and performance-poor data access methods back to the mainframe database server. Nearly every client we’ve worked with is shocked by how quickly this added burden appears and how long it persists.

Software/hardware provider behavior

And of course, the provider will not let you “ease off” the platform – or stated a little differently, a unit of work removed does not equate to a unit of cost removed. Further, many tend to dismiss the requirement for duplicate licenses across the mainframe and “whatever” the target platform might be: for example, you’ve got to have a DBMS in both environments.
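The point that a unit of work removed is not a unit of cost removed can be illustrated with simple arithmetic. The sketch below assumes a hypothetical fixed-plus-variable cost model with an optional contractual MSU floor; the function names and every figure are illustrative assumptions, not actual provider pricing or client data.

```python
def mainframe_cost(msu, fixed_cost, cost_per_msu, contract_floor_msu=0):
    """Annual cost under a hypothetical fixed-plus-variable model.

    contract_floor_msu is an assumed minimum billable commitment:
    consumption below the floor is still billed at the floor.
    """
    billable_msu = max(msu, contract_floor_msu)
    return fixed_cost + cost_per_msu * billable_msu


def cost_reduction_pct(msu_before, msu_after, fixed_cost, cost_per_msu,
                       contract_floor_msu=0):
    """Percentage drop in cost when consumption falls from before to after."""
    before = mainframe_cost(msu_before, fixed_cost, cost_per_msu, contract_floor_msu)
    after = mainframe_cost(msu_after, fixed_cost, cost_per_msu, contract_floor_msu)
    return 100.0 * (before - after) / before


# Illustrative figures only: a 30% workload reduction (1,000 -> 700 MSU).
no_floor = cost_reduction_pct(1000, 700, fixed_cost=4_000_000, cost_per_msu=6_000)
with_floor = cost_reduction_pct(1000, 700, fixed_cost=4_000_000, cost_per_msu=6_000,
                                contract_floor_msu=800)
print(f"No floor:   {no_floor:.1f}% cost reduction")    # 18.0%
print(f"With floor: {with_floor:.1f}% cost reduction")  # 12.0%
```

Under these assumed numbers, removing 30% of the workload removes only 18% of the cost, and only 12% once a contractual floor applies. The larger the fixed share of the bill, the wider that gap becomes.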

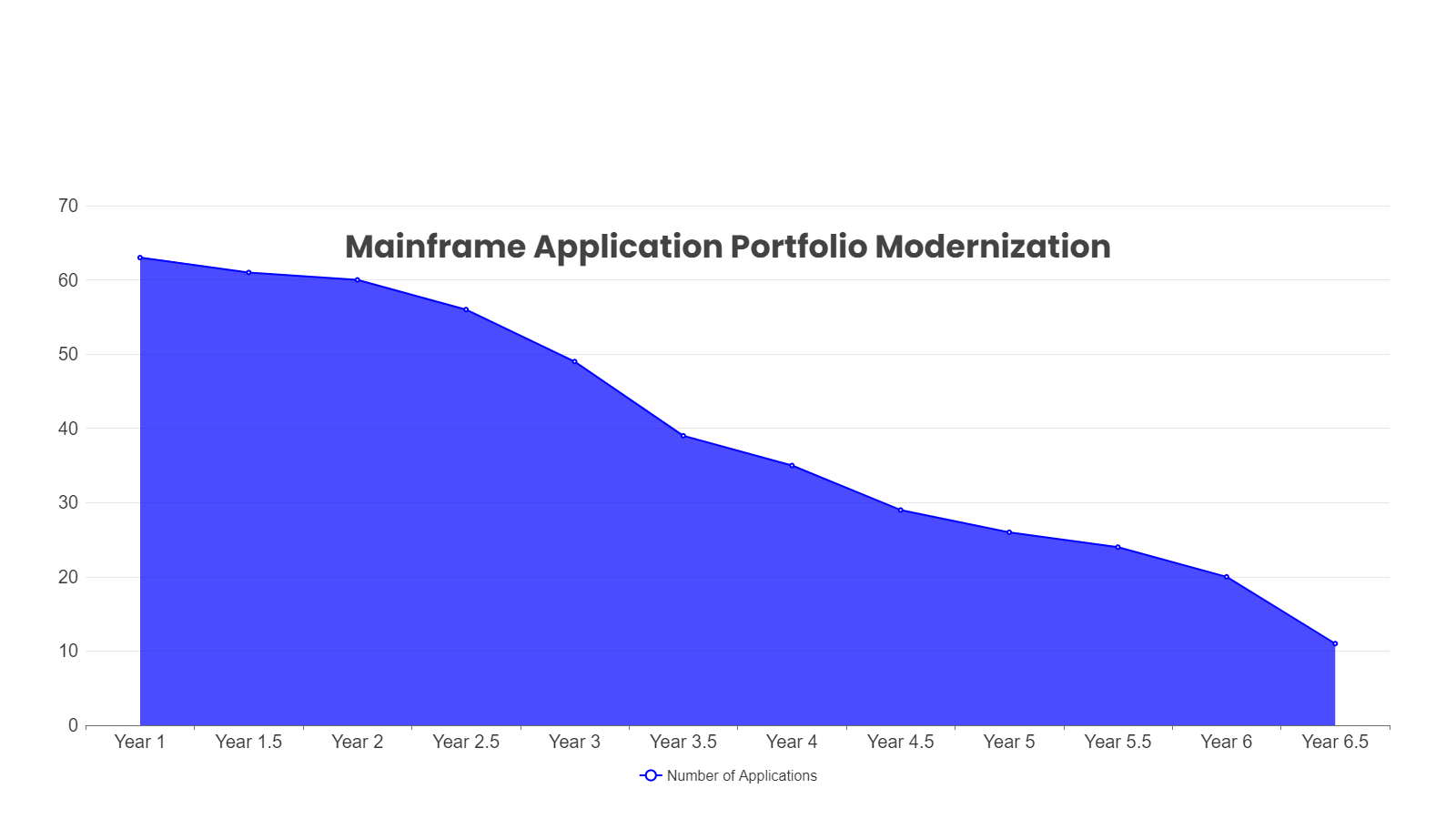

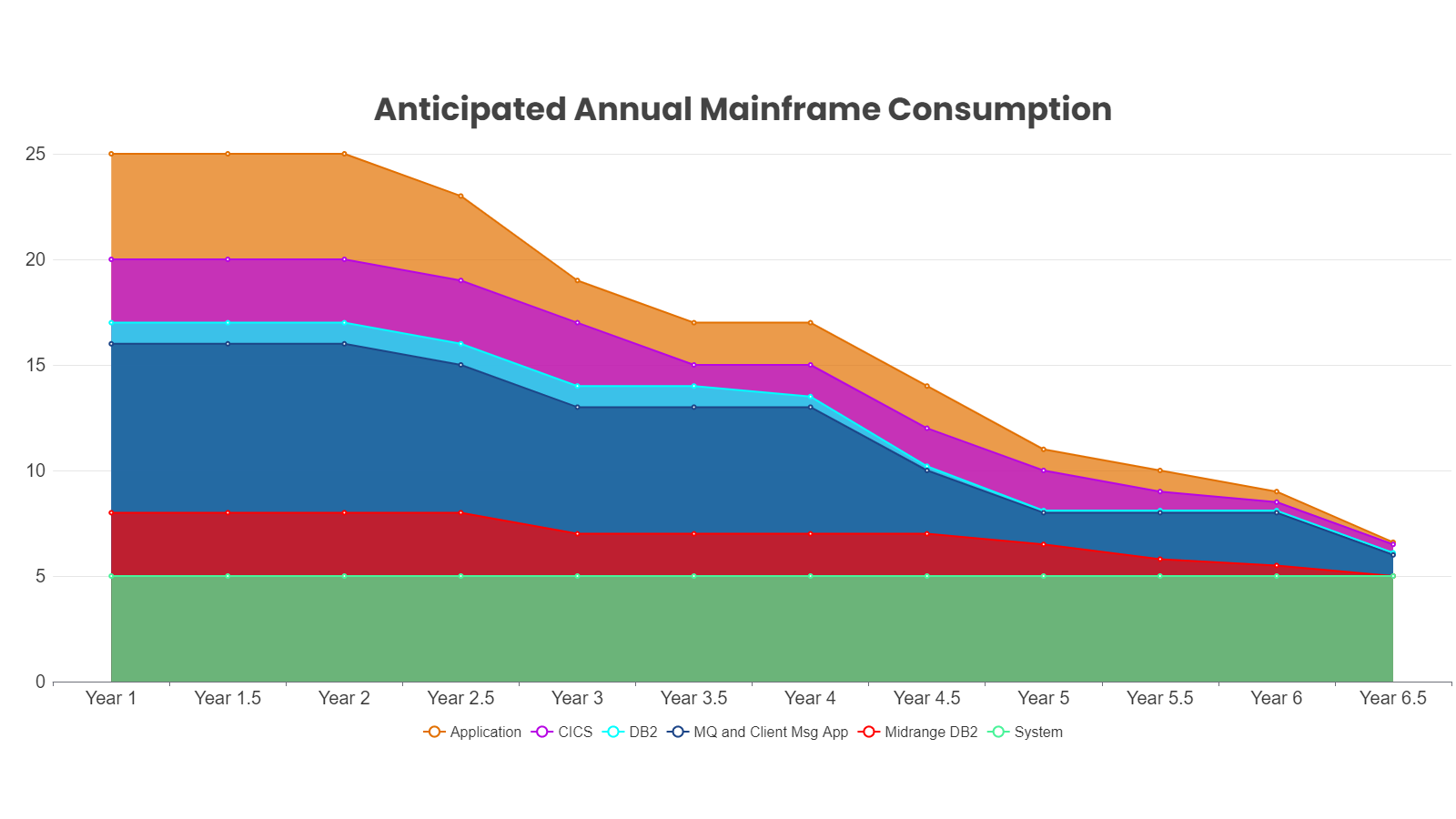

We did a study a number of years back for a firm committed to application modernization and eventual mainframe sunset. The chart below shows that even five years into the future, under the best-case scenario, there was still workload whose ultimate destination was unknown. Given what we said about provider pricing strategy, there’s a substantial cost overlap for this five-year period.

At the conclusion of the modernization, capacity and software analysis, we can project requirements for input to negotiation.

ISVs within the ecosystem are also looking to get what they can from a marketplace that

- on the one hand sees a decline in the total number of shops, but

- on the other hand, sees MSU growth in those shops retaining the mainframe.

Clients tell us that some vendors are more aggressive than others in capitalizing on what they see as lock-in that works to their advantage. And many of the ISVs don’t play, or don’t play well, in the sub-capacity environment. So it looks like you’re not saving anything, yet you’re burning cycles for the use of their product(s) while they continue to run in their business-as-usual (BAU) mode.

Complexity

Interim Interfaces

When it comes to a mainframe decommission effort, variety is not the spice of life. In our experience, the more successful modernization efforts have been the more streamlined ones, meaning those with less diversity in the application portfolio.

Woe to the customer that has high tens or low hundreds of data source update interfaces. They can’t be converted all at once, so the mainframe remains the authoritative data source.

It’s not just about the applications: there are the backups, retention copies and so on from years of being on the mainframe, and the large effort to “move them” to some other format that will live on for years.

Latency

Latency implications must be considered if the refactored applications are in a cloud, or even a colocation facility geographically separate, and you’re returning to your mainframe for data.
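A rough way to size the latency exposure is to multiply the number of sequential data calls in a transaction by the network round-trip time back to the mainframe. The sketch below is a minimal illustration under that assumption; the function names, call counts and round-trip times are hypothetical, not measurements from any client environment.

```python
def added_latency_ms(round_trips, rtt_ms):
    """Network time added by sequential (non-batched) calls to the mainframe."""
    return round_trips * rtt_ms


def transaction_time_ms(local_processing_ms, round_trips, rtt_ms):
    """Total elapsed time: local work plus the network time for each data call."""
    return local_processing_ms + added_latency_ms(round_trips, rtt_ms)


# Illustrative figures only: a transaction making 50 sequential data calls.
same_site = transaction_time_ms(local_processing_ms=30, round_trips=50, rtt_ms=0.5)
remote_site = transaction_time_ms(local_processing_ms=30, round_trips=50, rtt_ms=20)
print(f"Same site:   {same_site:.0f} ms")    # 55 ms
print(f"Remote site: {remote_site:.0f} ms")  # 1030 ms
```

With these assumed values, the same transaction goes from about 55 ms to just over one second purely because of distance. Applications that make many small data calls are the most exposed.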

Problem Resolution

Increased complexity in problem resolution, even old-fashioned “finger-pointing”, manifests itself in even simple environments. The developers of the “new” applications are (usually) convinced their application is invincible, so the burden of proof is back on the mainframe folks.

Disaster Recovery

One element of complexity that certainly adds to cost is the need for a brand new disaster recovery plan, and the supporting tools and contracts. The mainframe’s one-stop recovery is gone, and resiliency must be rearchitected to match the new environment. (And while this is an absolute necessity, it is frequently missed in the feasibility assessment.)

Competence or Capability

Aging out of the workforce

A while ago, we heard from an industry analyst: “For 15 years we’re hearing that the sky is falling. Well now, it really is falling!” Eventually, the mainframe resources who learned the technology before the client/server era were going to retire. Of those who remain in this marketplace, the better resources are working, in some cases at a premium rate.

Capability lost in translation?

We heard just this week from a $24B company in the early stages of their decommission efforts. We heard (admittedly from infrastructure and operations folks) that “our old systems did everything we needed – we’re hearing that the new stuff doesn’t.” Whether they are sacrificing speed for function, or the cultural transition to “off the shelf” is proving difficult, the entire venture is complicated, disruptive, and expensive. And at least in some cases, or for a season of time, it has reduced that precious functionality.

Currency of the platform, care for its efficient operation

Finally, outsourcers who “run/operate” rather than “manage/improve” lack the skills, the incentives, or both to continually optimize performance, or for that matter to keep the systems current. This carries significant cost and performance implications.

* * * * *

GTSG has supported the mainframe for the past 33 years and our team will continue to do so for the next 33.

If we can help you, please reach out to us at 877.467.9885 or email us at mainframe@gtsg.com.